Large Wireless Model (LWM): A Foundation Model for Wireless Channels

Submitted to the IEEE International Conference on Machine Learning for Communication and Networking (ICMLCN), 2025

Sadjad Alikhani, Gouranga Charan, Ahmed Alkhateeb

Wireless Intelligence Lab, Arizona State University, USA

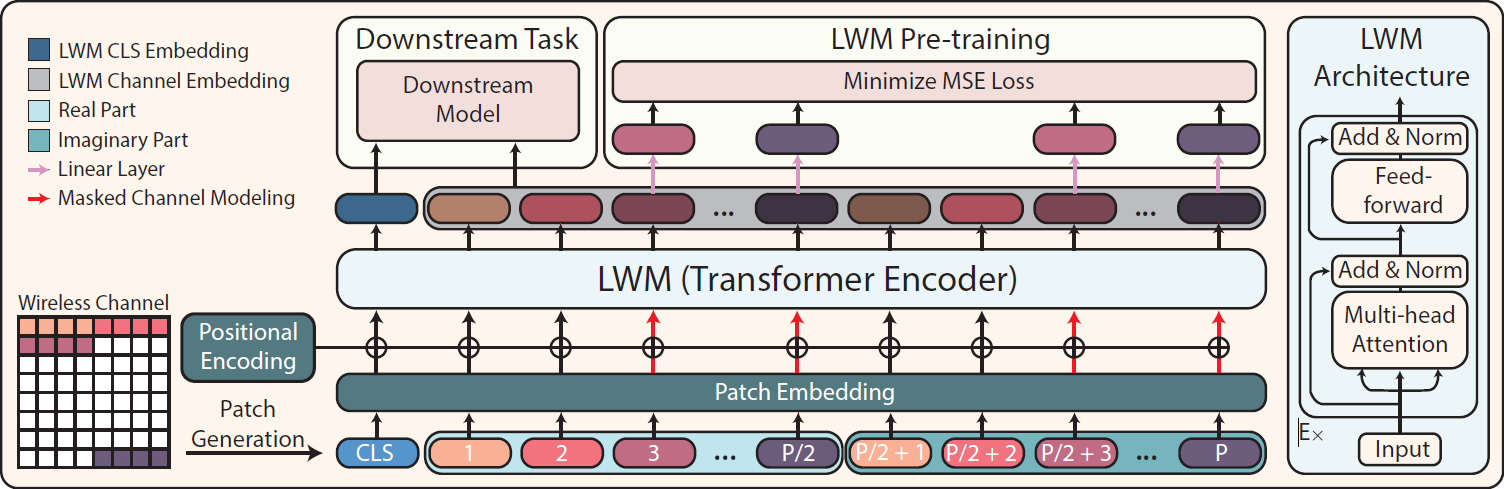

Figure: Offline pre-training and online embedding generation in LWM. The channel is divided into fixed-size patches, which are linearly embedded and combined with positional encodings before being passed through a Transformer encoder. During self-supervised pre-training, some patch embeddings are masked; LWM uses self-attention to extract deep features from the remaining patches, which allows the decoder to reconstruct the masked values. For downstream tasks, the pre-trained model generates LWM embeddings that are used in place of raw channels.
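The patching, masking, and embedding steps described in the caption can be sketched in a few lines of NumPy. This is a minimal illustration, not the authors' implementation: the function names, the 32×32 channel size, the patch size of 16, the 15% masking ratio, the zero-valued mask, and the random projection and positional arrays are all assumptions chosen for the example.

```python
import numpy as np


def patchify(channel: np.ndarray, patch_size: int) -> np.ndarray:
    """Split a complex channel matrix into fixed-size real-valued patches.

    Assumption: real and imaginary parts are flattened, concatenated,
    and then cut into consecutive patches of `patch_size` values.
    """
    flat = np.concatenate([channel.real.ravel(), channel.imag.ravel()])
    if flat.size % patch_size != 0:
        raise ValueError("patch_size must divide the number of real values")
    return flat.reshape(-1, patch_size)


def mask_patches(patches: np.ndarray, mask_ratio: float, rng: np.random.Generator):
    """Zero out a random subset of patches; return the masked copy and the indices."""
    n_patches = patches.shape[0]
    n_masked = max(1, int(round(mask_ratio * n_patches)))
    idx = np.sort(rng.choice(n_patches, size=n_masked, replace=False))
    masked = patches.copy()
    masked[idx] = 0.0  # hypothetical mask value; a learned mask token is also common
    return masked, idx


def embed(patches: np.ndarray, weight: np.ndarray, pos: np.ndarray) -> np.ndarray:
    """Linear patch embedding plus additive positional encodings."""
    return patches @ weight + pos[: patches.shape[0]]


# Illustrative usage with made-up dimensions.
rng = np.random.default_rng(0)
H = rng.standard_normal((32, 32)) + 1j * rng.standard_normal((32, 32))
patches = patchify(H, patch_size=16)
masked, masked_idx = mask_patches(patches, mask_ratio=0.15, rng=rng)
W = rng.standard_normal((16, 64)) * 0.02    # stand-in for a learned projection
P = rng.standard_normal((patches.shape[0], 64)) * 0.02  # stand-in for positional encodings
tokens = embed(masked, W, P)                # input sequence for a Transformer encoder
```

In a pre-training loop, the reconstruction loss would be computed only on the rows listed in `masked_idx`, comparing the decoder output against the original `patches` at those positions.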

Abstract

This paper presents the Large Wireless Model (LWM), the world’s first foundation model for wireless channels. Designed as a task-agnostic model, LWM generates universal, rich, contextualized channel embeddings (features) that can potentially enhance performance across a wide range of downstream tasks in wireless communication and sensing systems. Towards this objective, LWM, which has a transformer-based architecture, was pre-trained in a self-supervised manner on large-scale wireless channel datasets. Our results show consistent improvements in classification and regression tasks when using the LWM embeddings instead of raw channel representations, especially for high-complexity machine learning tasks and limited training datasets. LWM’s ability to learn from large-scale wireless data opens a promising direction for intelligent systems that can efficiently adapt to diverse tasks with limited data, paving the way for addressing key challenges in wireless communication and sensing systems.

Pre-training Setup

The LWM pre-training setup is designed to learn task-agnostic, robust representations of wireless channels. It uses a large and diverse dataset from the DeepMIMO project, encompassing over 1 million wireless channels from 15 scenarios: O1, Boston5G, ASU Campus, and 12 DeepMIMO city scenarios. These scenarios vary in size and user density, so the model is exposed to a wide range of spatial and propagation characteristics. By pre-training on such diverse datasets, LWM learns universal features that generalize across tasks without being tied to any specific application.

Resources

You can download the pre-trained LWM model for fine-tuning and inference directly from the Hugging Face model repository. The repository provides the necessary files and instructions for seamless integration into various tasks.

Additionally, the interactive demo showcases two downstream tasks: sub-6 GHz to mmWave beam prediction and LoS/NLoS classification. It compares the performance of models that use raw channels with that of models that use LWM embeddings, demonstrating how well the pre-trained model captures complex wireless channel characteristics.

For more information, refer to the LWM website.

Citation

S. Alikhani, G. Charan, and A. Alkhateeb, ‘Large Wireless Model (LWM): A Foundation Model for Wireless Channels’, arXiv [cs.IT]. 2024.

@misc{alikhani2024largewirelessmodellwm,
  title={Large Wireless Model (LWM): A Foundation Model for Wireless Channels},
  author={Sadjad Alikhani and Gouranga Charan and Ahmed Alkhateeb},
  year={2024},
  eprint={2411.08872},
  archivePrefix={arXiv},
  primaryClass={cs.IT},
  url={https://arxiv.org/abs/2411.08872},
}