Vision-Position Multi-Modal Beam Prediction Using Real Millimeter Wave Datasets

IEEE Wireless Communications and Networking Conference (WCNC) 2022

Gouranga Charan1, Tawfik Osman1, Andrew Hredzak1, Ngwe Thawdar2, and Ahmed Alkhateeb1

1Wireless Intelligence Lab, ASU

2Air Force Research Lab

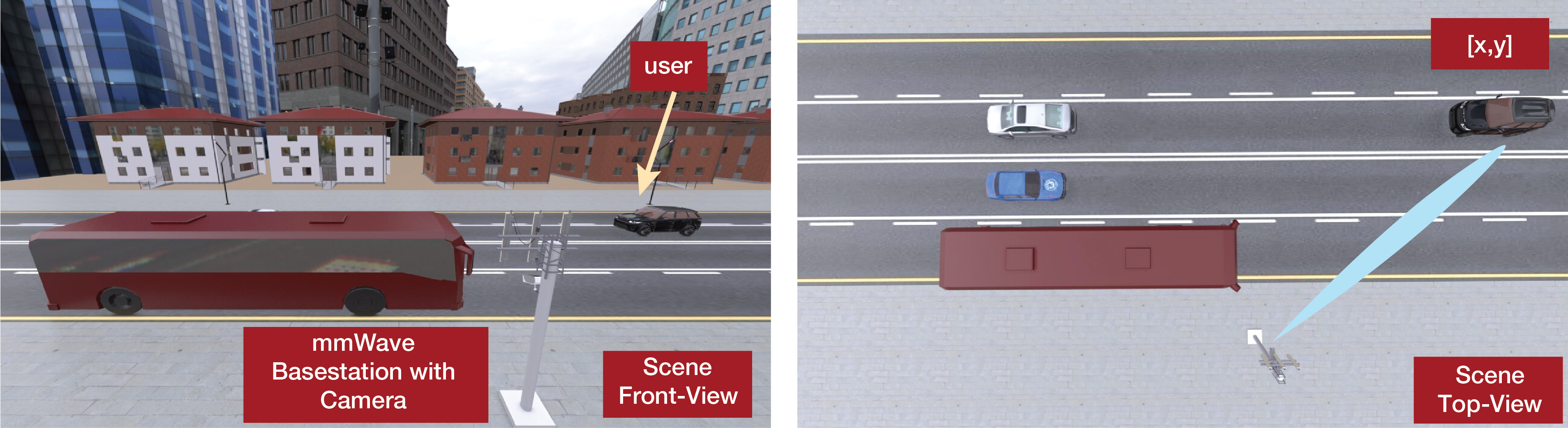

Given an image of the wireless environment or the position of the user equipment (UE) in that environment, can the mmWave/sub-THz base station predict the optimal beam index? Our machine learning-based solution leverages such additional sensing data for efficient mmWave/sub-THz beam prediction.

Abstract

Enabling highly mobile millimeter wave (mmWave) and terahertz (THz) wireless communication applications requires overcoming the critical challenges associated with the large antenna arrays deployed in these systems. In particular, adjusting the narrow beams of these antenna arrays typically incurs a high beam training overhead that scales with the number of antennas. To address these challenges, this paper proposes a multi-modal machine learning-based approach that leverages positional and visual (camera) data collected from the wireless communication environment for fast beam prediction. The developed framework has been tested on a real-world vehicular dataset comprising practical GPS, camera, and mmWave beam training data. The results show that the proposed approach achieves roughly 75% top-1 beam prediction accuracy and close to 100% top-3 beam prediction accuracy in realistic communication scenarios.
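The top-1 and top-3 figures quoted above are top-k accuracies: a prediction counts as correct when the ground-truth beam index is among the k highest-scoring beams. The snippet below is a minimal sketch of that metric in plain Python. The function name `top_k_accuracy`, the 4-beam codebook, and the toy scores are illustrative assumptions and are not taken from the paper or the dataset.

```python
def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true beam index is among the k highest-scoring beams.

    scores: list of per-sample score lists, one score per beam in the codebook.
    labels: list of ground-truth (optimal) beam indices, one per sample.
    """
    if len(scores) != len(labels):
        raise ValueError("scores and labels must have the same length")
    hits = 0
    for sample_scores, true_beam in zip(scores, labels):
        # Rank beam indices from highest to lowest predicted score.
        ranked = sorted(range(len(sample_scores)),
                        key=lambda beam: sample_scores[beam],
                        reverse=True)
        if true_beam in ranked[:k]:
            hits += 1
    return hits / len(labels)


# Toy data (hypothetical): 4 samples, 4-beam codebook.
scores = [
    [0.10, 0.70, 0.10, 0.10],  # true beam 1 is ranked first
    [0.40, 0.30, 0.20, 0.10],  # true beam 1 is ranked second
    [0.05, 0.15, 0.20, 0.60],  # true beam 3 is ranked first
    [0.50, 0.30, 0.15, 0.05],  # true beam 3 is ranked last
]
labels = [1, 1, 3, 3]

top1 = top_k_accuracy(scores, labels, k=1)
top3 = top_k_accuracy(scores, labels, k=3)
```

A high top-3 accuracy matters in practice because the base station can sweep only the few highest-ranked beams instead of the full codebook, which is where the reduction in beam training overhead would come from.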

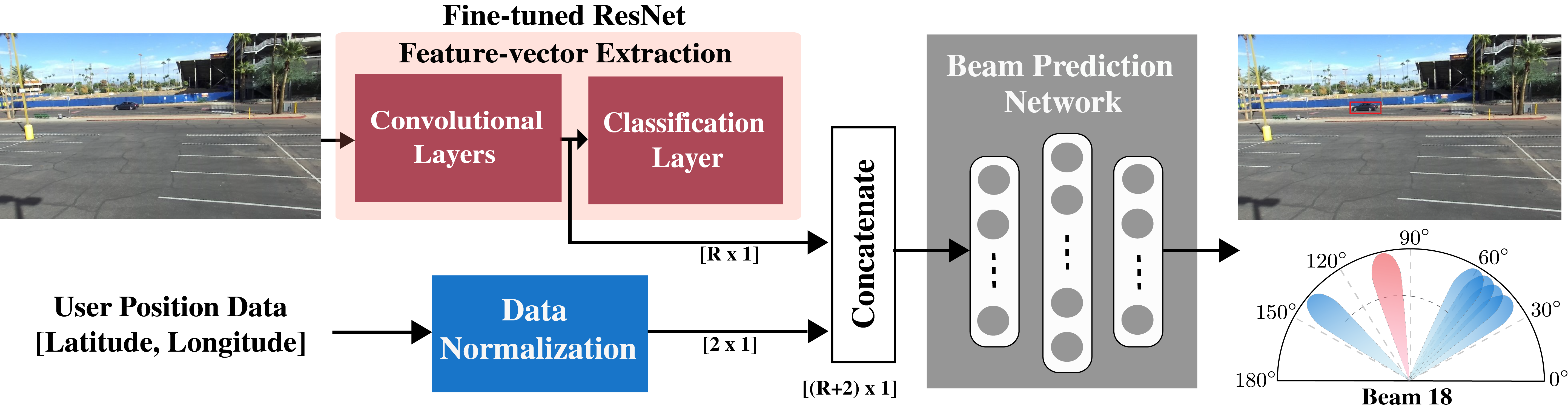

Proposed Solution

This figure illustrates the proposed multi-modal machine learning-based beam prediction model that leverages both visual and (not necessarily accurate) position data for mmWave/THz beam prediction.
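To make the idea of combining the two modalities concrete, here is a rough sketch of feature-level fusion: a normalized GPS position is concatenated with an image feature vector, and a single linear layer with a softmax produces a probability per beam. This is not the architecture shown in the figure. The function names, the 4-dimensional image feature, the 8-beam codebook, the coordinate ranges, and the random weights are all hypothetical placeholders chosen for illustration.

```python
import math
import random


def normalize_position(lat, lon, lat_range, lon_range):
    """Min-max scale a GPS fix to [0, 1] per coordinate (ranges are assumed known)."""
    (lat_min, lat_max), (lon_min, lon_max) = lat_range, lon_range
    return [(lat - lat_min) / (lat_max - lat_min),
            (lon - lon_min) / (lon_max - lon_min)]


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def fuse_and_predict(image_features, position, weights, bias):
    """Concatenate both modalities, apply one linear layer, return beam probabilities."""
    fused = list(image_features) + list(position)
    logits = [sum(w * x for w, x in zip(row, fused)) + b
              for row, b in zip(weights, bias)]
    return softmax(logits)


# Hypothetical sizes and values, for illustration only.
rng = random.Random(0)
num_beams = 8
image_features = [rng.uniform(-1.0, 1.0) for _ in range(4)]  # stand-in for CNN output
position = normalize_position(33.42, -111.93,
                              lat_range=(33.41, 33.43),
                              lon_range=(-111.94, -111.92))
# Untrained random weights: one row per beam, one column per fused feature.
weights = [[rng.uniform(-1.0, 1.0) for _ in range(len(image_features) + 2)]
           for _ in range(num_beams)]
bias = [0.0] * num_beams

probs = fuse_and_predict(image_features, position, weights, bias)
predicted_beam = max(range(num_beams), key=lambda beam: probs[beam])
```

In a trained model the weights would be learned from labeled beam training data, and the image features would come from a vision backbone rather than random numbers. The sketch only shows how the two inputs can share one classifier head.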

Video Presentation

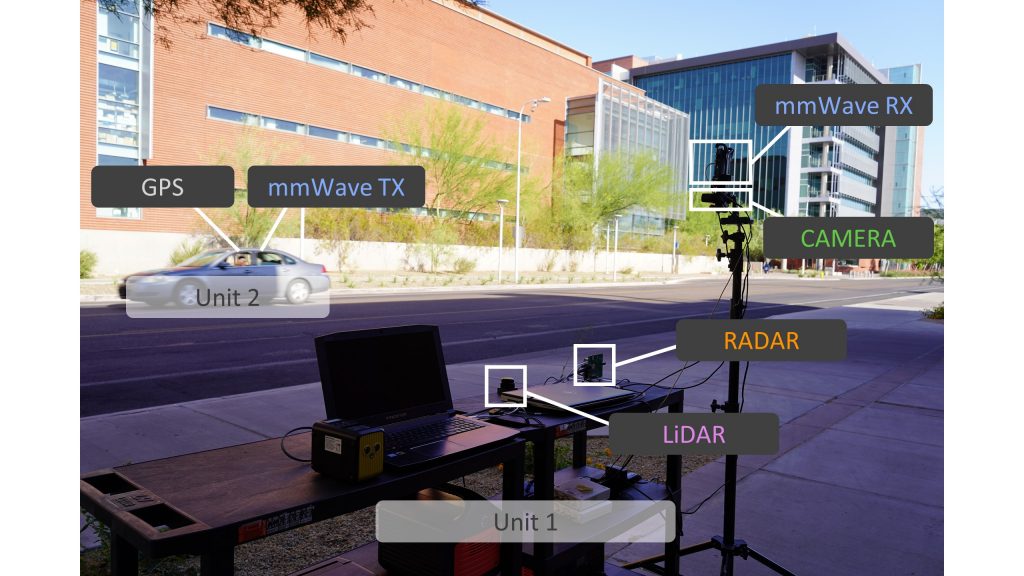

DeepSense 6G Dataset

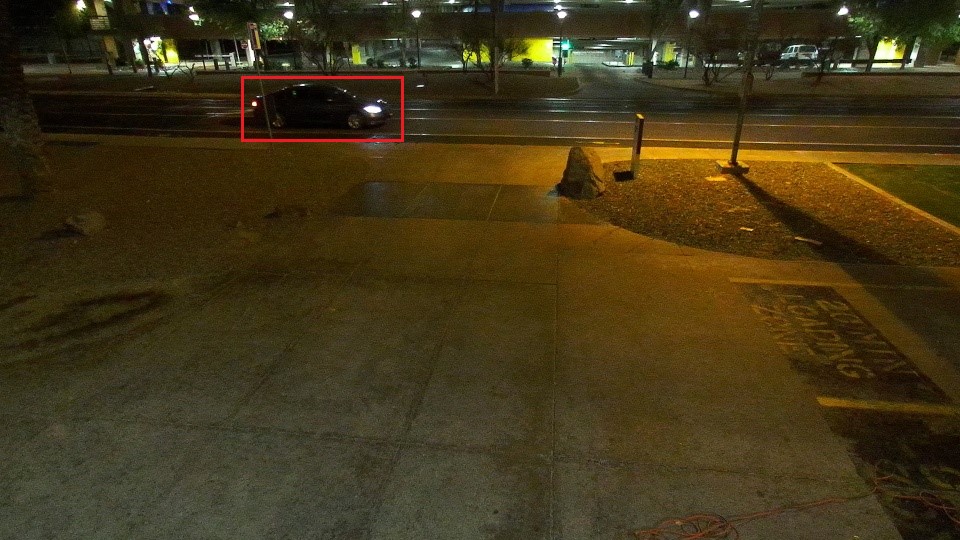

DeepSense 6G is a real-world multi-modal dataset of co-existing sensing and communication data, including mmWave wireless communication, camera, GPS, LiDAR, and radar data, collected in realistic wireless environments. Link to the DeepSense 6G website is provided below.

Scenarios

In this beam prediction task, we build development/challenge datasets based on the DeepSense data from scenarios 5 and 6. For further details regarding the scenarios, follow the links provided below.

Citation

A. Alkhateeb, G. Charan, T. Osman, A. Hredzak, J. Morais, U. Demirhan, and N. Srinivas, “DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset,” IEEE Communications Magazine, 2023.

@Article{DeepSense,

author={Alkhateeb, Ahmed and Charan, Gouranga and Osman, Tawfik and Hredzak, Andrew and Morais, Joao and Demirhan, Umut and Srinivas, Nikhil},

title={{DeepSense 6G: A Large-Scale Real-World Multi-Modal Sensing and Communication Dataset}},

journal={IEEE Communications Magazine},

year={2023},

publisher={IEEE}}

G. Charan, T. Osman, A. Hredzak, N. Thawdar, and A. Alkhateeb, “Vision-Position Multi-Modal Beam Prediction Using Real Millimeter Wave Datasets,” in Proc. of IEEE Wireless Communications and Networking Conference (WCNC), 2022.

@INPROCEEDINGS{Charan2022,

title={Vision-position multi-modal beam prediction using real millimeter wave datasets},

author={Charan, Gouranga and Osman, Tawfik and Hredzak, Andrew and Thawdar, Ngwe and Alkhateeb, Ahmed},

booktitle={2022 IEEE Wireless Communications and Networking Conference (WCNC)},

pages={2727--2731},

year={2022},

organization={IEEE}}